Approximate Inference

I am currently working on quantifying the quality of certain approximate inference methods utilizing Bayesian Networks. The two methods that I am currently researching are Likelihood Weighting (LW) and Markov Chain Monte Carlo (MCMC).

Updates

The latest version of the program features estimated P(e) based on the LW algorithm. The LW and MCMC algorithms have also been optimized. The LW algorithm is now about 1.5x to 2x faster than it was. This is due to the new CPT class which is based on a hashing values in a single float array. Previously the program used Java Reflected arrays. MCMC is also slightly faster than it was, but this is only a marginal improvement. Also with the new CPT class, CPTs can now be printed.

Code

A gzipped tar file of the code is available here [24KB].

Preliminary Results

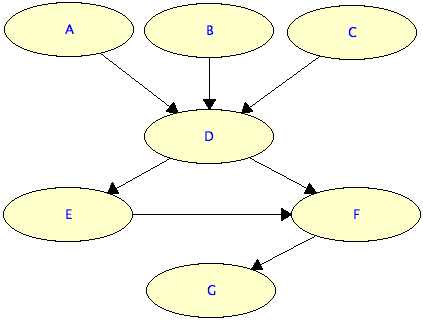

I've done some preliminary calculations to see how fast my LW / MCMC application works. Each one can calculate an approximate distribution from 1,000,000 samples on a 7 node network in about 3 to 4. With 10,000,000 samples, it takes LW 45 seconds and MCMC 32 seconds. These times include file-reading time, proprocessing time and computation time. Both methods give very similar results.

Updated Results (05.20.06)

Both LW and MCMC can calculate an approximate distribution from 1,000,000 samples on a 7 node network in about 2 to 2½ seconds. With 10,000,000 samples, it takes LW 17 seconds and MCMC 13 seconds. Just like before these times include file-reading time, proprocessing time and computation time. They now also include the time it takes to calculate P(e). Prolonged running of the program indicates that LW can compute 631,000 samples / second and MCMC can compute 778,000 samples / sec.

Comparison to SamIam

I noticed after comparing results to SamIam results that LW gives approximation results similar to those found with the Recursive Conditioning, Shenoy-Shafer, Hugin and ZC–Hugin algorithms. MCMC gives approximation results similar to those found with SamIam using the Belief Propagation algorithm.

Sample Network

Sample Input

51: bin/ > java -classpath . com/deak/thesis/ThesisMain "LW" "1000000"

"/Users/deak/Desktop/example3.net" "B" "b1" "A" "a1"

52: bin/ > java -classpath . com/deak/thesis/ThesisMain "MCMC" "1000000"

"/Users/deak/Desktop/example3.net" "/Users/deak/Desktop/evidence.inst"

Sample Output

Setting variable "B" to value "b1"

Setting variable "A" to value "a2"

Estimated P(e): 0.03004763125

E: 0.5 0.5

F: 0.5 0.5

G: 0.5 0.5

|

Running Likelihood Weighting with 1000000 samples. | SamIam results with Recursive Conditioning

| --------------------------------------------

A: 0.0 1.0 0.0 | A: 0.0 1.0 0.0

C: 0.9356 0.0644 | C: 0.9350 0.0650

B: 1.0 0.0 0.0 0.0 | B: 1.0 0.0 0.0 0.0

D: 0.0417 0.0509 0.0281 0.0375 0.0471 0.7948 | D: 0.0418 0.0512 0.0280 0.0374 0.0468 0.7948

E: 0.4997 0.5003 | E: 0.5 0.5

F: 0.4999 0.5001 | F: 0.5 0.5

G: 0.5 0.5 | G: 0.5 0.5

|

Run Time: 2.323 Seconds

Setting variable "B" to value "b1"

Setting variable "A" to value "a2"

Estimated P(e): 0.0305217185

Running MCMC with 1000000 samples.

A: 0.0 1.0 0.0

C: 0.9345 0.0655

B: 1.0 0.0 0.0 0.0

D: 0.0418 0.0517 0.0281 0.037 0.0465 0.795

E: 0.4996 0.5004

F: 0.4999 0.5001

G: 0.5006 0.4994

Run Time: 1.997 Seconds